I was working on a Microsoft 365 tenant report and had been using the PnP PowerShell module. But since getting the total storage capacity of the tenant that way requires write permissions to all SharePoint sites, I wanted to look into another way of getting these insights via code.

I use a report that can be pulled with the Get-MgReport cmdlets (here Get-MgReportSharePointSiteUsageStorage), and then I pull all licenses in the tenant with Get-MgSubscribedSku. From the license counts I calculate how much storage each license adds on top of the 1 TB that the tenant has initially, and compare that capacity with the used storage from the report.
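To make the arithmetic concrete, here is a minimal sketch of the capacity calculation as a standalone function. The function name `Get-EstimatedSharePointCapacityGB`, its parameter names, and the sample license counts are hypothetical. The per-license allowances are the values used in the script below, and dividing by 1024 to get GB is an assumption about the unit convention, so check both against your own tenant.

```powershell
# Hypothetical helper: estimates pooled SharePoint capacity in GB from license counts.
function Get-EstimatedSharePointCapacityGB {
    param (
        [int]$SharePointStandard = 0,
        [int]$SharePointEnterprise = 0,
        [int]$OneDriveBasic = 0,
        [int]$SharePointWac = 0,
        [int]$ExtraStorageGB = 0
    )

    # Work in MB so the per-license allowances stay whole numbers.
    $capacityMB = 1048576                           # 1 TB base allocation
    $capacityMB += $SharePointStandard * 10240      # 10 GB per license
    $capacityMB += $SharePointEnterprise * 10240    # 10 GB per license
    $capacityMB += $OneDriveBasic * 512             # 0.5 GB per license (value from the script)
    $capacityMB += $SharePointWac * 1024            # 1 GB per license (value from the script)
    $capacityMB += $ExtraStorageGB * 1024           # purchased extra storage, 1 GB per unit

    return $capacityMB / 1024                       # assumed conversion: 1 GB = 1024 MB
}

# Made-up tenant: 50 Enterprise seats plus 100 GB of purchased extra storage
Get-EstimatedSharePointCapacityGB -SharePointEnterprise 50 -ExtraStorageGB 100   # → 1624
```

Keeping the calculation in a function like this makes it easy to check the numbers by hand before pointing the script at a real tenant.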

You can run the script without creating an app registration with a certificate, but I prefer this way of authenticating, combined with DevOps, so that the script runs as an application.

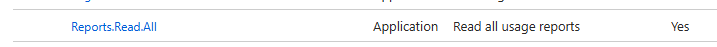

If you are using an app registration like I do, you need to assign the following Microsoft Graph permissions for the application:

The script looks like this:

param (

[Parameter(Mandatory)]

[string]$AppId,

[Parameter(Mandatory)]

[string]$Certificate,

[Parameter(Mandatory)]

[string]$MicrosoftCustomerId

)

# Import Graph Powershell Module

Import-Module -Name Microsoft.Graph.Authentication

$GraphAuthModule = get-module Microsoft.Graph.Authentication

write-host "##Debug: Microsoft Graph Module is" $GraphAuthModule

if($Null -eq $GraphAuthModule)

{

write-host "Graph Authentication Module missing"

exit

}

#Connect to Graph PowerShell SDK using certificate and App Registration

Connect-MgGraph -ClientId $AppId -Certificate $certificate -TenantId $MicrosoftCustomerId

Get-MgReportSharePointSiteUsageStorage -Period "D30" -outFile "$pwd\AllSharePointSiteUsageStorage.csv"

$AllSharePointSiteUsageStorage = Import-Csv -Path "$pwd\AllSharePointSiteUsageStorage.csv" -Delimiter ","

Remove-Item -path "$pwd\AllSharePointSiteUsageStorage.csv"

$SharePointUsedStorage = $AllSharePointSiteUsageStorage[0]."Storage Used (Byte)" / 1MB # number in MB (1 MB = 1048576 bytes)

$SharePointStorageResponseGeoUsedStorageGB = $SharePointUsedStorage / 1024 # number in GB

# Pull all service plans and count them to get numbers of service plans with SharePoint Storage included

$licenses = Get-MgSubscribedSku

$licenses.ServicePlans

$Units_SPS = 0

$Units_SPE = 0

$Units_ODB = 0

$Units_SWAC = 0

$Units_SPST = 0

foreach ($l in $licenses)

{

#$ConsumedUnits = $l.ConsumedUnits

$ServicePlans = $l.ServicePlans

foreach($SP in $ServicePlans)

{

if($SP.ServicePlanName -eq "SHAREPOINTSTANDARD")

{

$Units_SPS = $l.ConsumedUnits + $Units_SPS

}

if($SP.ServicePlanName -eq "SHAREPOINTENTERPRISE")

{

$Units_SPE = $l.ConsumedUnits + $Units_SPE

}

if($SP.ServicePlanName -eq "ONEDRIVE_BASIC")

{

$Units_ODB = $l.ConsumedUnits + $Units_ODB

}

if($SP.ServicePlanName -eq "SHAREPOINTWAC")

{

$Units_SWAC = $l.ConsumedUnits + $Units_SWAC

}

}

if($l.SkuPartNumber -eq "SHAREPOINTSTORAGE")

{

$Units_SPST = $l.PrepaidUnits.Enabled

}

}

$StorCAL = 1048576 # number in MB - 1 TB

$StorCAL = $StorCAL + $Units_SPS * 10240 # each SharePoint Standard adds 10 GB

$StorCAL = $StorCAL + $Units_SPE * 10240 # each SharePoint Enterprise adds 10 GB

$StorCAL = $StorCAL + $Units_ODB * 512 # each OneDrive Basic adds 0.5 GB (512 MB)

$StorCAL = $StorCAL + $Units_SWAC * 1024 # each SharePoint Web adds 1 GB

$StorCAL = $StorCAL + $Units_SPST * 1024 # each SharePoint Storage adds 1 GB

$StorCAL = $StorCAL / 1024 # number in GB (1 TB = 1048576 MB = 1024 GB)

$SharePointStorageResponseGeoAvailableStorageGB = $StorCAL

# Calculate the percentage of used storage

$SharePointUsagePercentance = ($SharePointStorageResponseGeoUsedStorageGB/$SharePointStorageResponseGeoAvailableStorageGB).ToString("P")

Write-Host "Geo Used Storage in GB: $SharePointStorageResponseGeoUsedStorageGB"

Write-Host "Geo Storage Capacity in GB: $SharePointStorageResponseGeoAvailableStorageGB"

Write-Host "Percentage Used: $SharePointUsagePercentance"

The script writes the used storage, the storage capacity, and the percentage used to the console.

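As an illustration of the final step, here is a minimal sketch that runs the used-storage and percentage calculation against made-up report data, so it can be tried without a Graph connection. Only the column name "Storage Used (Byte)" comes from the script. The "Report Date" column, the sample values, and the 1024 GB capacity are assumptions for the example, and the conversion uses 1 GB = 1073741824 bytes.

```powershell
# Made-up sample in the shape of the report CSV (values are illustrative only).
$sampleCsv = @'
Report Date,Storage Used (Byte)
2024-01-31,274877906944
2024-01-30,268435456000
'@

$rows = $sampleCsv | ConvertFrom-Csv

# Like the script, read the used storage from the first row of the report.
$usedGB = [double]$rows[0].'Storage Used (Byte)' / 1GB

# Assumed capacity: only the 1 TB base allocation, no license add-ons.
$capacityGB = 1024

# "P" formats the ratio as a percentage using the current culture.
$percentUsed = ($usedGB / $capacityGB).ToString("P")

Write-Host "Used: $usedGB GB of $capacityGB GB ($percentUsed)"
```

With these sample numbers the used storage works out to 256 GB, a quarter of the assumed capacity.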